

Music industry organisations back Human Artistry Campaign putting the spotlight on generative AI debates

By Chris Cooke | Published on Friday 17 March 2023

A plethora of organisations representing music and other creative disciplines, in the US and beyond, yesterday backed what is being called the Human Artistry Campaign, with the aim of ensuring “artificial intelligence technologies are developed and used in ways that support human culture and artistry, and not ways that replace or erode it”.

Formally launched with a panel at South By Southwest in Texas, the new campaign follows the recent spike in interest in creative or generative AI technologies, ie AI tools that can generate original content by crunching a load of data linked to existing content. That increased interest has been caused by the hype surrounding various generative AI products, ChatGPT in particular.

And in music more specifically we’ve had Tencent bigging up its AI that can automatically create new vocals for dead artists; Spotify launching an AI-powered personalised DJ to chat away at its users; and David Guetta employing AI tools to both write and record a new Eminem track. You know, without having to bother the brain or voice of Eminem.

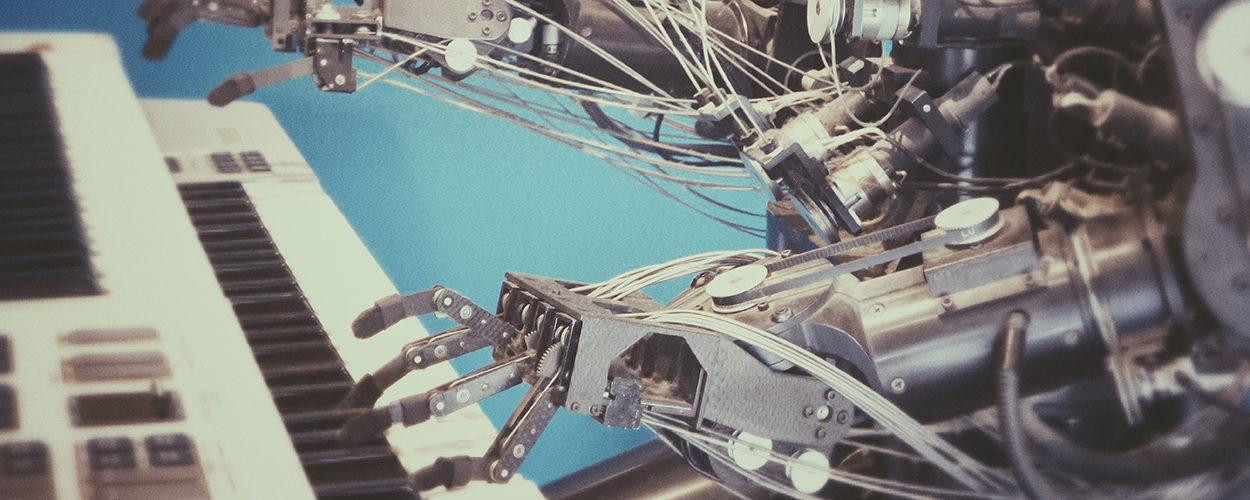

Just like with any new tech, but possibly more so, generative AI creates both opportunities and challenges for the music and wider creative industries, especially as that tech gets ever more sophisticated.

Music-makers can use such tech as part of the music-making process and to help them create the ever increasing stack of other content that is now involved in building and engaging a fanbase as a frontline artist. However, AI tools that make it quick and easy to compose, produce, record and master new music can quickly become a competitor of both creators and companies in the music business. Most likely initially in production and mood music, but ultimately more widely.

A set of fundamental principles published by this all-new Human Artistry Campaign yesterday begins by acknowledging the opportunities, with the campaign keen to stress that it’s not some sort of luddite movement. The music community, it notes, has long embraced all sorts of new technologies in the music-making process, and “AI already is and will increasingly play a role as a tool to assist the creative process, allowing for a wider range of people to express themselves creatively”.

“AI has many valuable uses outside of the creative process itself, including those that amplify fan connections, hone personalised recommendations, identify content quickly and accurately, assist with scheduling, automate and enhance efficient payment systems – and more”, it adds. “We embrace these technological advances”.

But then there are the challenges too, both legal and ethical. In legal terms, generative AI throws up a bunch of copyright questions. Two in particular. First, should content created by generative AI enjoy copyright protection, just like content created by humans? And also, do the makers of an AI technology need licences from existing copyright owners when training their tech through the mining of data related to existing content?

In the US, where there is a copyright registration process, the first question is being tackled by the US Copyright Office, which handles the registrations. It has previously declined to allow an AI-created artwork to be registered. More recently it did allow the registration of a graphic novel with human-created words and machine-created images, but said that the individual images were not protected by copyright in isolation because of the lack of human involvement in their creation.

Yesterday the Office announced a review of all this, while also setting out its current position on whether or not AI-created works can be registered. Basically, copyright registrations will be accepted for works where a creator employed AI technologies but still led the creative process. However, registrations will be rejected where the creator merely provided ‘prompts’ to the AI.

The Office said in a statement: “If a work’s traditional elements of authorship were produced by a machine, the work lacks human authorship and the Office will not register it. For example, when an AI technology receives solely a prompt from a human and produces complex written, visual, or musical works in response, the ‘traditional elements of authorship’ are determined and executed by the technology – not the human user”.

Of course, every country has its own copyright rules. In the UK, copyright law does provide protection to “computer-generated works”, and a recent review by the Intellectual Property Office decided that that rule should stay in place. But in many countries the rules are not that specific, meaning there is plenty of ongoing debate over whether any one country’s copyright system could – not to mention should – protect AI-generated works.

As for what licences AI makers need when training their technologies, that also depends on what each country’s copyright laws say.

In the US some argue that data mining – or at least some kinds of data mining – is possibly protected by the always tricky concept of fair use, which would mean a licence was not always required. In the UK it was recently proposed by the IPO that a specific copyright exception should be introduced for data mining by AI makers, though that proposal is now on hold.

On both sides of the Atlantic this particular question is set to be tested in court by Getty Images, which has sued Stability AI, the company behind the visual generative AI platform Stable Diffusion, in both the UK and US courts.

As for the Human Artistry Campaign, it is adamant that any data mining needs to be licensed, and that entirely AI-created works should not enjoy copyright protection. “Use of copyrighted works, and use of the voices and likenesses of professional performers, requires authorisation, licensing, and compliance with all relevant state and federal laws”, it states.

And to that end, “governments should not create new copyright or other IP exemptions that allow AI developers to exploit creators without permission or compensation”. Oh, and “copyright should only protect the unique value of human intellectual creativity”.

Elsewhere in its fundamental principles, the campaign talks about the importance of “trustworthiness and transparency”. It states: “Complete record keeping of copyrighted works, performances, and likenesses, including the way in which they were used to develop and train any AI system, is essential”.

“Algorithmic transparency and clear identification of a work’s provenance are foundational to AI trustworthiness”, it goes on. “Stakeholders should work collaboratively to develop standards for technologies that identify the input used to create AI-generated output”.

“In addition to obtaining appropriate licences, content generated solely by AI should be labeled describing all inputs and methodology used to create it – informing consumer choices, and protecting creators and rightsholders”.

On top of that, “policymakers must consider the interests of human creators when crafting policy around AI. Creators live on the forefront of, and are building and inspiring, evolutions in technology and as such need a seat at the table in any conversations regarding legislation, regulation, or government priorities regarding AI that would impact their creativity and the way it affects their industry and livelihood”.

Despite the legal and ethical challenges, the Human Artistry Campaign’s new document does also contain some optimism, expressing the belief that the outputs of human creativity will always be better than works entirely generated by the machines.

“Creative works shape our identity, values and worldview”, it says. “People relate most deeply to works that embody the lived experience, perceptions, and attitudes of others. Only humans can create and fully realise works written, recorded, created, or performed with such specific meaning. Art cannot exist independent of human culture”.

Yeah, maybe. You can read the full statement and see the list of organisations backing it here.

Oh, and in case you’re wondering whether ChatGPT thinks AI-created works should have copyright protection, well, “as an AI language model, I do not have personal opinions or beliefs”, it says, “but I can provide information and perspectives on this topic”.

“Currently, the legal framework around copyright protection for AI-created works is not entirely clear”, it goes on. “In most countries, copyright law grants protection to ‘original works of authorship’, which are typically creations of human authors. However, the question arises whether works generated by AI should be considered as original works of authorship and therefore be protected by copyright law”.

“Some argue that AI-generated works are the result of human creativity and ingenuity, and therefore, they should be subject to copyright protection”, it adds. “Others argue that because AI systems are programmed and do not possess creativity or originality in the human sense, they should not be granted copyright protection”.

“Overall, the answer to whether AI-created works should have copyright protection is not straightforward and depends on many factors”, it then concludes. “As AI technology continues to evolve and generate increasingly complex and creative works, it will be important to re-evaluate copyright laws and determine how they can best accommodate these new forms of intellectual property”.

Yeah, thanks for that, ChatGPT. But I handle all the copyright chatter around here, thank you very much. Well, for now at least.